By: Zachary Everett, CAEP Communications Associate

February 4, 2016

As of this writing, it’s mid-January. For three of our staff members, this is a month when a do-not-disturb sign should hang from their office doors. That’s because every January and July, the Program Review team pores over hundreds of program reports for tech editing. It’s a feat that requires concentration, long hours, and collaboration, and it comes with plenty of computer eye strain. Since no do-not-disturb sign actually hung on their doors, I sat down with the Program Review team to understand what tech editing is all about.

Collaboration is key to getting a program report to the tech editing stage. Educator Preparation Providers (EPPs), Specialized Professional Associations (SPAs), and volunteers work together. Each SPA sets its own content standards (in areas such as English, Technology, or Mathematics) and identifies content experts who have the credentials and experience in subject-specific educator preparation. SPA coordinators work with Program Review staff to orchestrate this process each season.

Hear about program review from NCTE Reviewer, Dr. Paul Yoder.

Hear about program review from NCTE Reviewer, Dr. Mike Wallace.

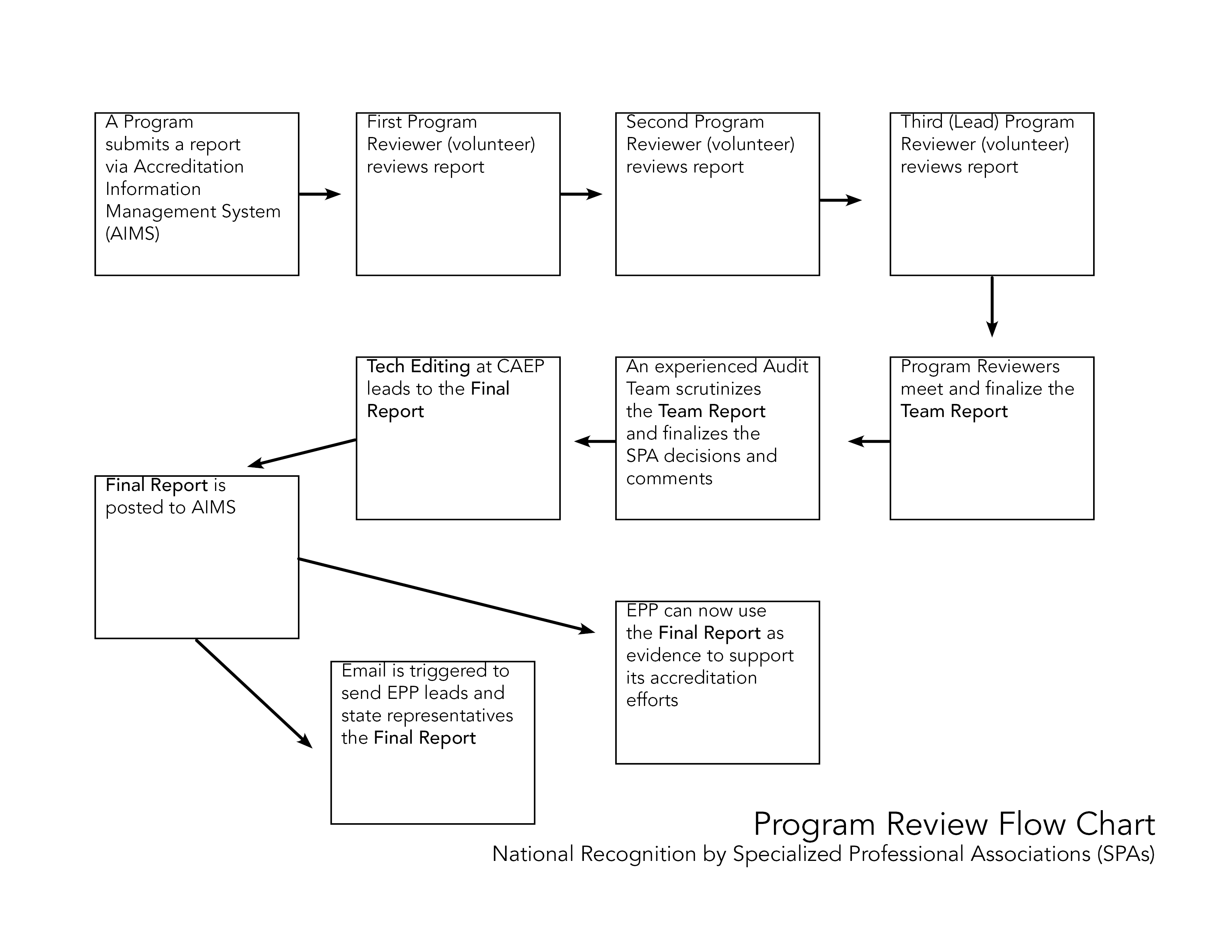

Here’s how it works: First, an EPP compiles and submits its program report via CAEP’s Accreditation Management System (AIMS). Then a team of volunteer program reviewers examines the narrative and evidence, with each reviewer working independently and completing an individual report. The reviewers then meet to discuss their findings, come to consensus, and produce a Team Report. The Team Report goes through an additional stage of scrutiny by the SPA Audit team, which then completes its own report. The final phase of the program review cycle is tech editing, wherein our Program Review team invests a solid month getting every report as close to perfect as possible: they ensure that the reports are complete and consistent and that policies are always followed.

Collaboration remains key to the end of the process. Monica Crouch, Accreditation Associate, said, “These months may be stressful, but this is important: making a difference at the program level so we can prepare quality P-12 teachers. This shared value is why it is easy to collaborate with SPA coordinators, EPPs, program reviewers, and CAEP’s Information Technology staff.”

Our Program Review team ensures CAEP’s policies and procedures are followed; they cross-check for matching data, decision statuses, and timelines; and, when needed, they clean up the grammar and tone of the reports. “It comes down to propriety, professionalism, policy, and procedures,” said Banhi Bhattacharya, Senior Director of Program Review.

Okay, I’m starting to get it. But now I have new questions.

• “So how many program reports are we talking about?” Sabata Morris, Senior Accreditation Associate, informed me that it depends on the season; usually we receive 800-1,350 program reports. This season that number is 922.

• “How long do these take?” Again, it depends. The initial review of a typical report may take about an hour, but the finished product (the Final Report) might take several hours of brainstorming and discussion among reviewers, auditors, and CAEP staff.

• “How do you divide the work among three people?” Dr. Bhattacharya said that this big pool of work is divided among the three Program Review staff, two knowledgeable consultants, and the ever-committed Elizabeth Vilky, CAEP’s Senior Director of State and Member Relations, who has years of experience in Program Review. This season, each Program Review staffer has just shy of 200 reports to tech edit.

As the volume of work started to sink in for me, I asked why they do all of this. All three seemed just short of stunned that I would even ask. It’s because the quality of this work matters!

• Crouch: “It’s about quality assurance. EPPs do a lot of work. We want to make sure they receive a quality final report they can use as evidence for accreditation.”

• Bhattacharya: “The stakes are high for EPPs. We need to make sure the high content standards set by the SPAs are implemented and, ultimately, the reports are understandable and useful.”

• Morris: “SPA Program Review is part of the accreditation process in states that require it, and in other states many EPPs opt to do it to make sure their programs are high quality. This is a great way to give them the best feedback we can.”

It’s now February, so how did they do this time around? Of the 922 reports, 98.8% were completed on deadline; only five reports remain, and their final details are now being clarified at the SPA level. I applaud our volunteers, SPA coordinators, and the Program Review team for managing this feat season after season.